There have been a handful of times over the last few years where I have needed to take time series data and group the runs of data together to determine when a certain value changed, and how long it stayed that way.

Sample Data

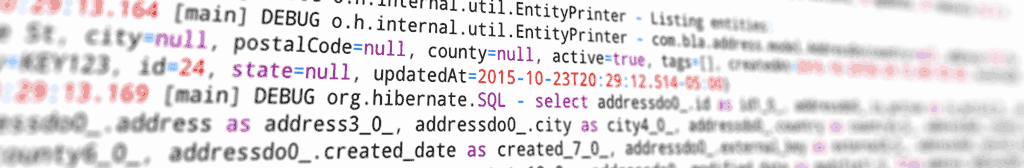

We have a log table that, after keeping only the columns relevant for this post, looks like the diagram above.

| logged_at | id | value1 |

|---|---|---|

| 2016-05-31 | 1 | 4 |

| 2016-05-31 | 2 | 5 |

| 2016-06-06 | 2 | 10 |

| 2016-06-06 | 1 | 4 |

| 2016-06-14 | 1 | 4 |

| 2016-06-14 | 2 | 10 |

| 2016-06-15 | 2 | 10 |

| 2016-06-15 | 1 | 8 |

| 2016-06-17 | 1 | 8 |

| 2016-06-17 | 2 | 10 |

| 2016-09-23 | 1 | 4 |

| 2016-09-23 | 1 | 4 |

| 2017-01-03 | 2 | 5 |

| 2017-11-20 | 1 | 8 |

| 2017-11-20 | 2 | 10 |

| 2017-11-28 | 2 | 5 |

| 2017-11-28 | 2 | 5 |

We can see in this data that the values oscillate - for id 1, value is either 4 or 8, and for id 2, the value is 5 or 10. It goes back and forth over time. We can also see that the value will repeat - maybe there are some other changes for these records, but the value field stays the same across other updates.

What we want to do is eliminate the duplicate values in the runs of data, and gather the timestamp where that value was first seen in the run, and when it was last seen.

For example, for id 1, we should end up with x rows : 4, 8, 4, 8. For id 2, we should expect to have 5 rows: 5, 10, 5, 10, 5.

Sample Code

StackOverflow was helpful in figuring out how to do this. This post closely matched what I was trying to do. I wanted to see how the row numbering worked, in particular, using two row numbers and subtracting.

Lets start with this:

SELECT

log1.logged_at,

log1.value1,

log1.id,

ROW_NUMBER() OVER ( PARTITION BY id ORDER BY logged_at ) as byId,

ROW_NUMBER() OVER ( PARTITION BY id, value1 ORDER BY logged_at ) as idValue,

ROW_NUMBER() OVER ( PARTITION BY id ORDER BY logged_at )

- ROW_NUMBER() OVER ( PARTITION BY id, value1 ORDER BY logged_at ) AS idMinusIdValue

FROM logtable log1

order by id, logged_atThis is what we get:

| logged_at | id | value1 | byId | idValue | idMinusIdValue |

|---|---|---|---|---|---|

| 2016-05-31 | 1 | 4 | 1 | 1 | 0 |

| 2016-06-06 | 1 | 4 | 2 | 2 | 0 |

| 2016-06-14 | 1 | 4 | 3 | 3 | 0 |

| 2016-06-15 | 1 | 8 | 4 | 1 | 3 |

| 2016-06-17 | 1 | 8 | 5 | 2 | 3 |

| 2016-09-23 | 1 | 4 | 6 | 4 | 2 |

| 2016-09-23 | 1 | 4 | 7 | 5 | 2 |

| 2017-11-20 | 1 | 8 | 8 | 3 | 5 |

| 2016-05-31 | 2 | 5 | 1 | 1 | 0 |

| 2016-06-06 | 2 | 10 | 2 | 1 | 1 |

| 2016-06-14 | 2 | 10 | 3 | 2 | 1 |

| 2016-06-15 | 2 | 10 | 4 | 3 | 1 |

| 2016-06-17 | 2 | 10 | 5 | 4 | 1 |

| 2017-01-03 | 2 | 5 | 6 | 2 | 4 |

| 2017-11-20 | 2 | 10 | 7 | 5 | 2 |

| 2017-11-28 | 2 | 5 | 8 | 3 | 5 |

| 2017-11-28 | 2 | 5 | 9 | 4 | 5 |

Notice that the value for idMinusValue is not sequential, but it does group together the runs of the data. idMinusValue also will repeat across ids.

Now we want to compress the runs, and sort correctly:

WITH groupings AS (

SELECT

log1.logged_at,

log1.id,

value1,

ROW_NUMBER() OVER ( PARTITION BY id ORDER BY logged_at )

- ROW_NUMBER() OVER ( PARTITION BY id, value1 ORDER BY logged_at ) AS idMinusIdValue

FROM logtable log1

), runs AS (

SELECT

id,

value1,

min(logged_at) AS first_seen,

max(logged_at) AS last_seen

FROM groupings

GROUP BY id, idMinusIdValue, value1

)

SELECT *

FROM runs

ORDER BY id, first_seen| id | value1 | first_seen | last_seen |

|---|---|---|---|

| 1 | 4 | 2016-05-31 | 2016-06-14 |

| 1 | 8 | 2016-06-15 | 2016-06-17 |

| 1 | 4 | 2016-09-23 | 2016-09-23 |

| 1 | 8 | 2017-11-20 | 2017-11-20 |

| 2 | 5 | 2016-05-31 | 2016-05-31 |

| 2 | 10 | 2016-06-06 | 2016-06-17 |

| 2 | 5 | 2017-01-03 | 2017-01-03 |

| 2 | 10 | 2017-11-20 | 2017-11-20 |

| 2 | 5 | 2017-11-28 | 2017-11-28 |

We see the expected rows - 4,8,4,8 for id 1 and 5,10,5,10,5 for id 2.